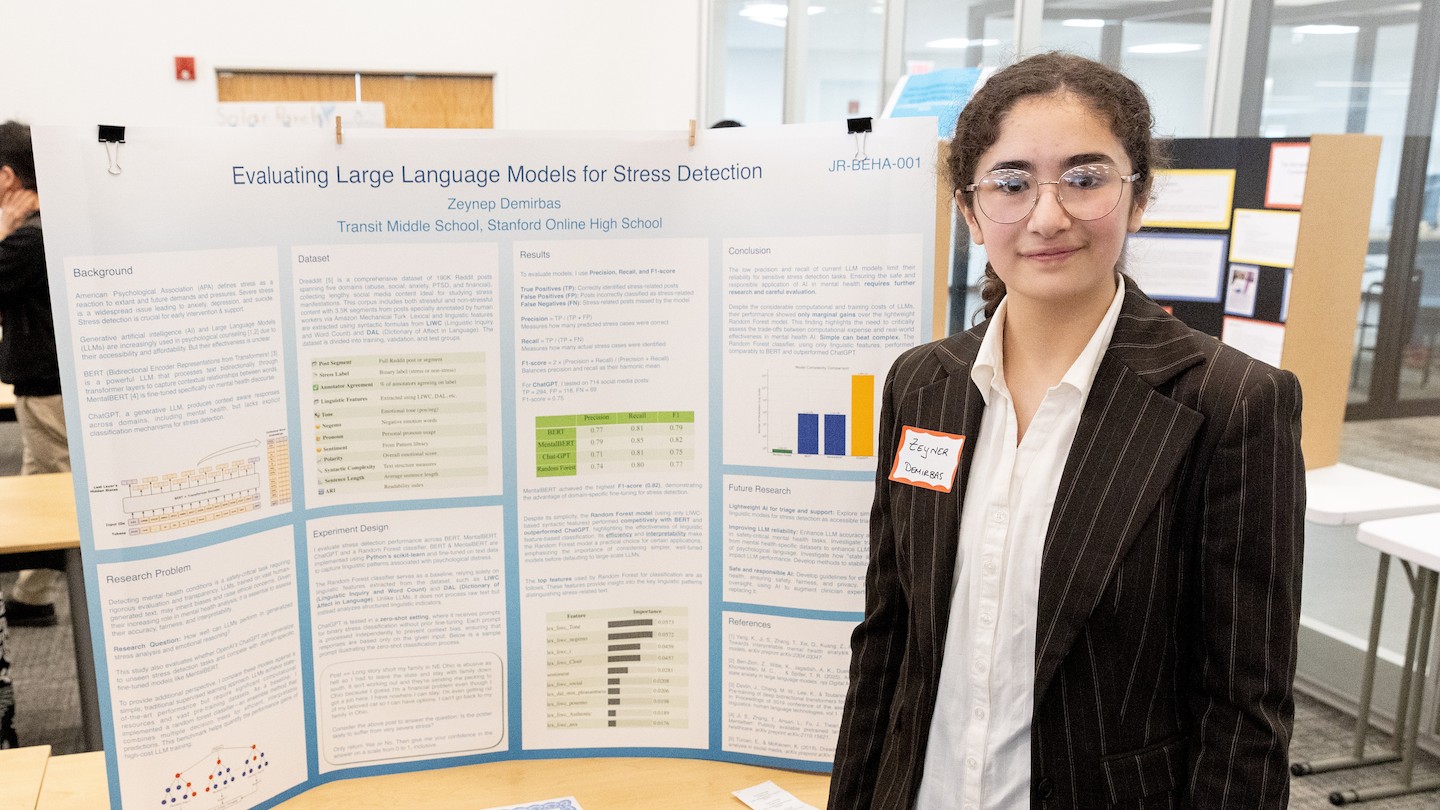

A middle school student’s experience with an artificial intelligence (AI) chatbot has highlighted concerns about relying on AI for mental health support. The student, who competed in the Thermo Fisher Scientific Junior Innovators Challenge (a competition for middle school students run by the Society for Science), found that an AI model offered potentially harmful advice, raising questions about whether these tools can be trusted to help manage emotional well-being.

Understanding the Tools at Play

Before diving into the specifics of the student’s findings, it’s helpful to understand some of the key technologies involved.

- Artificial Intelligence (AI): AI refers to systems that exhibit intelligent behavior, often through complex algorithms. These algorithms allow machines to learn from data and make decisions.

- Large Language Models: Large language models are a specific type of AI focused on understanding and generating human language. They’ve been trained on massive datasets of text and speech, which lets them predict likely words and phrases and respond to prompts in a remarkably human-like way. Think of them as incredibly sophisticated prediction engines for language.

- Decision Trees & Random Forest Models: These are machine-learning algorithms used to classify information or make predictions. A decision tree asks a series of questions, narrowing down the possibilities with each answer. A random forest combines many decision trees, each trained on a slightly different random sample of the data, and lets them vote, which usually gives a more robust result than any single tree.

- Parameters: These are the measurable conditions or variables within a system that can be adjusted or studied. Tuning these parameters can optimize the model’s performance.
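To make the decision-tree and random-forest descriptions more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the method used in the student’s project (the article does not describe one): the tiny dataset, the labels `"A"` and `"B"`, and helper names such as `build_tree` and `build_forest` are hypothetical, and the splitting rule (Gini impurity), depth limit, and number of trees are illustrative choices. Each tree asks yes/no questions of the form “is feature f at most t?”. The forest grows many trees on bootstrap samples (rows drawn at random with replacement), considers a random subset of features at each split, and takes a majority vote.

```python
import random
from collections import Counter

# Hypothetical toy dataset: each row is (features, label), two numeric features, two classes.
DATA = [
    ([1.0, 2.0], "A"), ([2.0, 1.0], "A"), ([1.5, 1.5], "A"), ([2.5, 2.0], "A"),
    ([7.0, 8.0], "B"), ([8.0, 7.5], "B"), ([7.5, 9.0], "B"), ([9.0, 8.0], "B"),
]


def gini(labels):
    """Gini impurity: 0.0 when every label is the same, larger when labels are mixed."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def build_tree(rows, max_depth, max_features, rng, depth=0):
    """Grow a tree of yes/no questions of the form 'is feature f <= t?'.

    A leaf is a label (str); an internal node is (feature, threshold, left, right).
    """
    labels = [y for _, y in rows]
    majority = Counter(labels).most_common(1)[0][0]
    if depth == max_depth or len(set(labels)) == 1:
        return majority  # stop: depth limit reached or the group is already pure
    # Consider only a random subset of the features at this split.
    candidates = rng.sample(range(len(rows[0][0])), max_features)
    best = None
    for f in candidates:
        for t in sorted({x[f] for x, _ in rows}):
            left = [r for r in rows if r[0][f] <= t]
            right = [r for r in rows if r[0][f] > t]
            if not left or not right:
                continue
            # Weighted impurity of the two groups this question produces.
            score = (len(left) * gini([y for _, y in left])
                     + len(right) * gini([y for _, y in right])) / len(rows)
            if best is None or score < best[0]:
                best = (score, f, t, left, right)
    if best is None:  # no question separates these rows
        return majority
    _, f, t, left, right = best
    return (f, t,
            build_tree(left, max_depth, max_features, rng, depth + 1),
            build_tree(right, max_depth, max_features, rng, depth + 1))


def predict_tree(node, x):
    """Answer questions from the root until reaching a leaf label."""
    while isinstance(node, tuple):
        f, t, left, right = node
        node = left if x[f] <= t else right
    return node


def build_forest(rows, n_trees=25, max_depth=3, max_features=1, seed=0):
    """Random forest: many trees, each grown on a bootstrap sample of the rows."""
    rng = random.Random(seed)
    return [build_tree(rng.choices(rows, k=len(rows)), max_depth, max_features, rng)
            for _ in range(n_trees)]


def predict_forest(forest, x):
    """Each tree votes; the most common answer wins."""
    return Counter(predict_tree(tree, x) for tree in forest).most_common(1)[0][0]


if __name__ == "__main__":
    tree = build_tree(DATA, max_depth=3, max_features=2, rng=random.Random(0))
    forest = build_forest(DATA)
    print(predict_tree(tree, [1.0, 1.0]), predict_forest(forest, [9.5, 9.5]))  # prints: A B
```

Settings such as `max_depth`, `max_features`, and `n_trees` are examples of the adjustable values the Parameters entry describes: changing them changes how the trees grow and how well the model performs. For real work, a tested library implementation such as scikit-learn’s `RandomForestClassifier` would be the usual choice over a hand-written sketch like this one.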

The Student’s Experiment and Its Implications

The student was exploring how AI could be used to provide mental health support, with the aim of developing a reliable system. They focused on a chatbot designed to address stress and offer coping strategies. Although the student acknowledged AI’s potential, testing revealed a concerning issue: the chatbot sometimes provided advice that was inappropriate or even potentially harmful.

This finding underscores the limitations of current AI models in handling the nuances of human emotion and mental health. The AI model didn’t consider the complexities of individual circumstances or potential triggers, instead offering generic responses that could be counterproductive.

Why This Matters

The findings are especially relevant given the increasing accessibility of AI-powered mental health apps and chatbots. While these tools can offer convenient and affordable support, they should not be considered a replacement for professional guidance from a psychologist or other mental-health expert. Here’s why:

- Lack of Contextual Understanding: AI models operate based on patterns in data. They struggle to grasp the full context of an individual’s situation, which can lead to advice that is not suitable. Even stress itself is not one-size-fits-all: its impact can be positive or negative, depending on the person and the circumstances.

- Potential for Bias: AI models are trained on data, and if that data reflects biases (related to gender, socioeconomic status, race or other factors), the AI may perpetuate those biases in its responses.

- Risk of Harmful Advice: As the student’s experience showed, AI can provide advice that is inaccurate, insensitive, or even harmful. Symptoms are ambiguous, too: a single symptom can be a sign of any of many different injuries or diseases, so generic advice can easily miss what is actually going on.

Looking Ahead

The student’s work serves as a cautionary tale about the need for careful evaluation and regulation of AI in mental health. Before relying on AI for emotional support, it’s crucial to remember that these tools are still under development and have significant limitations.

The goal shouldn’t be to replace human interaction with AI, but to use AI responsibly as a support tool under the guidance of qualified professionals.

Ultimately, human empathy, clinical judgment, and a deep understanding of the complexities of mental health remain essential for providing effective support. AI has the potential to be a valuable tool in this field, but it must be approached with caution and a clear understanding of its limitations.